Q: What is a checkpoint in machine learning and deep learning?

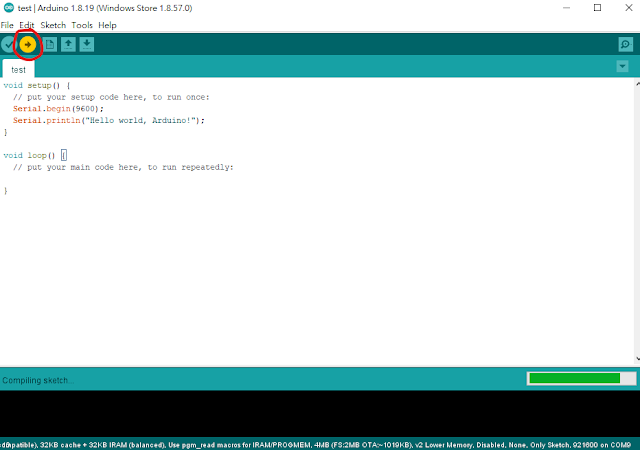

A: A checkpoint preserves the intermediate state of a model while it is being trained.

As large language models (LLMs) have developed, models have grown ever larger, so keeping and making use of checkpoints has become important. Some researchers are investigating how to resume an interrupted training run from a saved checkpoint and continue the unfinished training.

In deep learning, a checkpoint (literally a checkpoint or gate, 檢查站/關口) is a model saved partway through the training process.
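The save-and-resume idea described above can be illustrated with a minimal sketch. It uses only the Python standard library and a toy one-parameter model, so it is not how any particular framework implements checkpointing. The names `save_checkpoint`, `load_checkpoint`, `train`, and the `stop_after` parameter (which simulates an interruption) are hypothetical and chosen for illustration. A real deep learning checkpoint would typically also store optimizer state, the learning-rate schedule, and random-number-generator state.

```python
import os
import pickle
import tempfile


def save_checkpoint(path, state):
    """Write the training state to disk, replacing any older checkpoint."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as f:
        pickle.dump(state, f)
    # Rename only after the write finished, so a crash mid-write
    # cannot leave a truncated file at `path`.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as f:
        return pickle.load(f)


def train(path, total_steps, save_every=10, stop_after=None):
    """Fit y = w * x by gradient descent, checkpointing every `save_every` steps.

    `stop_after` is a hypothetical knob that simulates an interruption
    by leaving the loop early without saving.
    """
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data, true w is 2
    learning_rate = 0.01

    # Resume from the last checkpoint if there is one, else start fresh.
    state = load_checkpoint(path) or {"step": 0, "w": 0.0}

    while state["step"] < total_steps:
        if stop_after is not None and state["step"] >= stop_after:
            break  # simulated crash: progress since the last save is lost
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (state["w"] * x - y) * x for x, y in data) / len(data)
        state["w"] -= learning_rate * grad
        state["step"] += 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "model.ckpt")
    train(ckpt, total_steps=50, stop_after=25)   # interrupted at step 25
    print("last saved step:", load_checkpoint(ckpt)["step"])
    final = train(ckpt, total_steps=50)          # resumes from the checkpoint
    print("final step:", final["step"], "w:", final["w"])
```

Because the interruption happens between saves, the second call to `train` restarts from the most recent checkpoint, not from the exact point of failure. Saving more often loses less work on a crash but costs more I/O, which is the trade-off that becomes significant for very large models.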

References:

Machine Learning Checkpointing (Deepchecks)

Resume Training from Checkpoint Network (MATLAB)

Rojas, E., Kahira, A. N., Meneses, E., Gomez, L. B., & Badia, R. M. (2020). A study of checkpointing in large scale training of deep neural networks. arXiv preprint arXiv:2012.00825.

Xiang, L., Lu, X., Zhang, R., & Hu, Z. (2024, May). SSDC: A Scalable Sparse Differential Checkpoint for Large-scale Deep Recommendation Models. In 2024 IEEE International Symposium on Circuits and Systems (ISCAS) (pp. 1-5). IEEE.